AI is the hot topic of the moment, and yet … sensitive information from a robot vacuum cleaner, taken for machine learning purposes, has been shared on the internet in a horrifying breach of privacy.

In the age of digital devices, it’s easy to forget that our private spaces are constantly being monitored, whether we realise it or not.

We all know that if we so much as mention wanting a holiday, ads for cruises will be all up in our grill on social media. This has been creeping us out for quite some time. From our phones to our internet-connected fridges, we are constantly feeding data back to companies. But who has access to it? It turns out, a lot of randos do.

And now, things have taken a dark turn via our robot slaves. They and their bedfellows are turning on us.

AI data privacy: Why we should be worried

According to MIT Technology Review, a series of extremely personal images surfaced in online forums. These images were captured by iRobot’s Roomba J7 series robot vacuums. You know those little robot vacuum cleaners that make your floors look immaculate, then go back to their nest to charge? In theory, we should love these, as they save us time and are free employees who don’t need breaks to vape and eat.

To make these machines work better, their developers have been using them to capture images and video inside the homes where they were placed (the people living there agreed to let the vacuums into their homes for research purposes).

The footage was then sent to Scale AI, a company that hires workers to label audio clips, photos, and videos so that AI systems can learn what they show. The labelled data is then used to train artificial intelligence.

All good in theory. Until it wasn’t.

What happened?

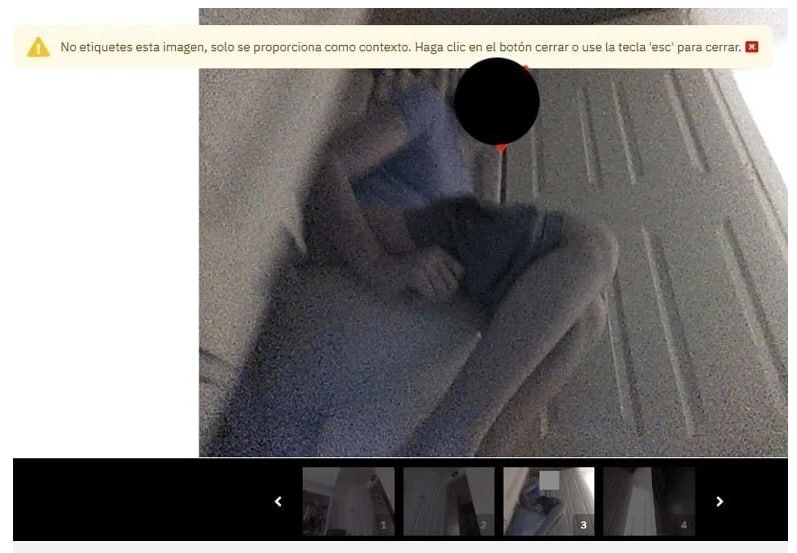

The images captured by the Roomba were not just of furniture, as originally intended; they also included pictures of people in intimate settings. One showed a woman on the toilet, which, as you can imagine, is not the kind of image she wanted out there.

If the picture taken by the Roomba had stayed within the network of data labellers, that would not have been such a big deal. But of course, humans being humans, someone took the photo of the woman doing her business and posted it in a private group chat. From there, it ended up all over the internet.

iRobot, the world’s largest vendor of robotic vacuums, confirmed that these images were captured by its Roombas in 2020. According to a company statement, they were taken by special development robots with hardware and software modifications that are not, and never were, present on the iRobot consumer products available for purchase.

Data privacy is a big deal

This incident confirms our worst fears about data privacy: even when we understand the terms and conditions and agree to them, things can go wrong. Very wrong.

Internet of Things (IoT) companies have access to our personal lives, so it is crucial that they have strict storage and access controls in place to protect our privacy. We just want a cleaner home, not pics of us with our pants down online. Unfortunately, the data we feed back to these companies can end up in the hands of third parties we have no agreement with.

The technology we use in our homes should make our lives easier, not humiliate us. It’s hard to imagine a more private moment than a trip to the smallest room in the house. The thought of a robot recording it and uploading the footage is dystopian in the truest sense.

While we love what AI can do for us, and these machines do need to learn, we also need to be able to trust the companies doing this not to be total douchebags with our privacy.

AI vacuums and machine learning

iRobot, the company behind the Roomba J7 series, has shared over two million images with Scale AI and likely even more with other data-labelling platforms. Every extra set of hands that data passes through is another place it can escape, so we need to talk about the points at which personal information can leak out.

According to the MIT article, iRobot collects over 95% of its image data from real homes. Employees or volunteers agree to let iRobot collect training data, including video streams, while the devices are running. In return, they receive incentives for participation, which we assume means payment.

iRobot has also recently asked buyers of its vacuums to opt in to contributing training data through its app.

While we understand the plight of AI researchers trying to train these devices (and who doesn’t want a robot to vacuum their home? What a great toy!), the data privacy concerns are very, very real and have the potential to deeply embarrass us if things go horribly wrong.

This is the Wild West of technology, and since we are only at the starting line, we need to get this right. Maybe it is time for a rethink.