A recent study published in Psychological Science reveals that AI-generated faces of white people are perceived as more ‘real’ than those of people of colour.

The study, conducted by psychology researchers from Australia, Canada, and the United Kingdom, asked participants to distinguish real photos of people from AI-generated ones. The researchers found that “white AI faces are judged as human more often than actual human faces”, a phenomenon they described as “AI hyperrealism”.

AI-generated faces

For the study, researchers recruited 124 adults aged 18 to 50, all of whom were white. Participants were shown a mix of 200 images, half of them photos of real people and half AI-generated, and for each one they had to decide whether it depicted a “real human” or an “AI-generated human”.

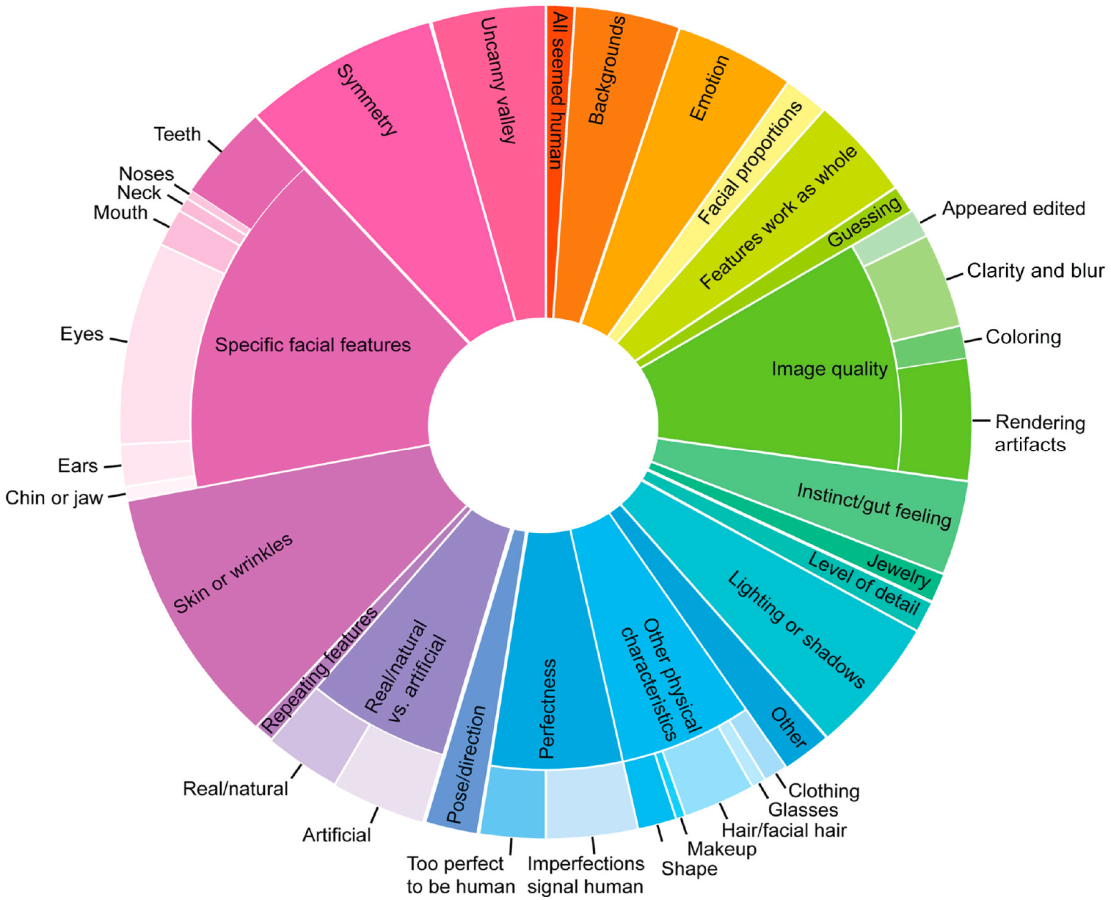

After the task, participants rated their confidence in their answers from 0 (“not at all confident”) to 100 (“completely confident”). They were also asked to explain why they thought an image was real or AI-generated. Tricky!

“Hyperrealism” is everywhere

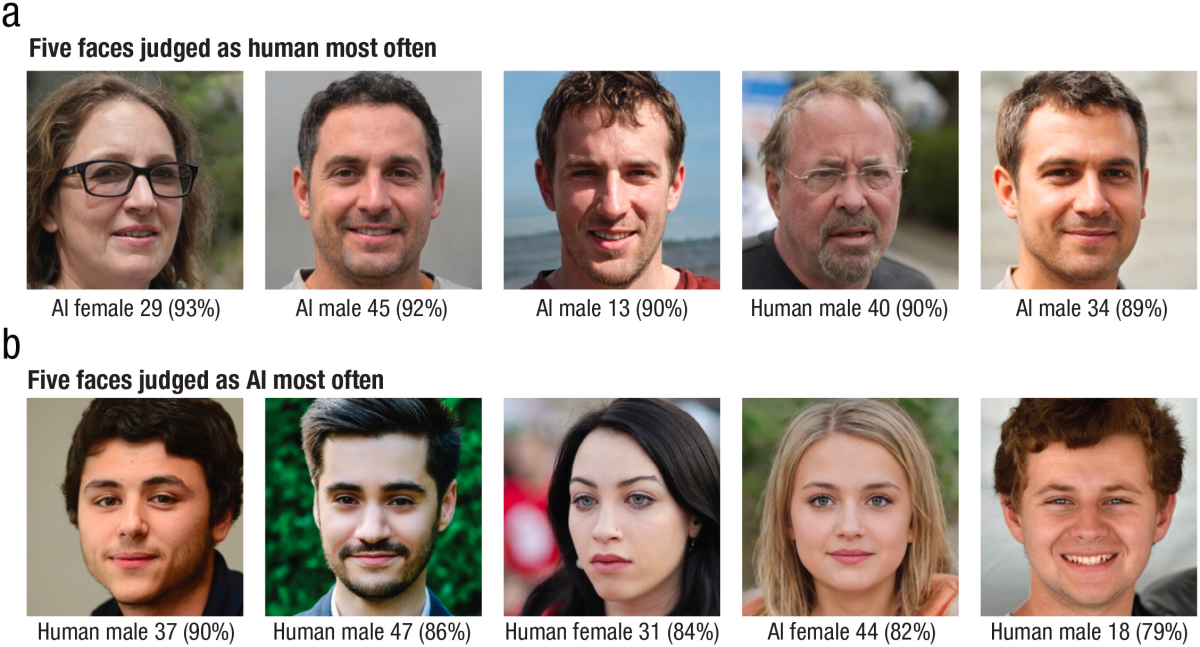

The researchers concluded that AI-generated faces of white individuals were judged as human “significantly” more often than real human faces, a pattern that proved “robust” throughout the study. The five AI faces most often rated as human were all white, and four of the five were white men. Each was rated as a real human by 89 to 93 percent of participants.

By contrast, responses to the five human faces most often rated as AI were more mixed.

The researchers also noted how “concerning” it was that some participants were overconfident in their wrong answers. An astonishing 51 percent of participants were classed by the authors as “poor performance poor insight”. In other words, not only did they ‘flop’ the task, they also misjudged their own abilities and believed they had done well.

“The poorest performers were the least aware of their AI detection errors,” wrote the researchers. It seems that self-awareness is nowhere to be found!

“This effect is also known as the ‘Dunning-Kruger Effect’ in psychology more broadly. In this case, we think it’s because people are confident when they feel like something is human – and because that’s the wrong judgement for AI faces, we see this false sense of confidence,” ANU’s Dr. Amy Dawel tells The Chainsaw.

AI’s known bias

AI models are well documented to contain biases against people of colour, which could have dangerous real-world consequences in the form of racial discrimination.

“This problem is already apparent in current AI technologies that are being used to create professional-looking headshots. When used for people of colour, the AI is altering their skin and eye colour to those of White people,” said ANU’s Dr. Amy Dawel, clinical psychologist and senior author of the paper.

As AI technology improves and becomes better at producing photorealistic images, however, Dawel cautions that “the differences between AI and human faces will probably disappear soon.” This could amplify the dangers of misinformation and identity theft online.

Is it possible to introduce more racial and gender diversity into AI models? Yes, Dr. Dawel tells The Chainsaw. However, we “first need to better understand how the data AI is trained on impacts its outputs. This means training AI on sets that are diverse, or even have different types of biases, and testing what they create.

“It may be that training AI on diversified information might cause ‘blending’ in some cases rather than non-biased products.

“We urgently need funding for independent research to investigate these problems, and provide guidance for regulating the AI industry,” she adds.