Trigger Warning: This story contains screenshots that mention sexual assault and graphic violence.

Looks like there are more undercover AI girlfriend simps or creeps than we thought. A study reveals that almost 10% of all questions users put to AI chatbots are sexual queries related to erotica and roleplay.

Computer science experts from Stanford, UC Berkeley, UC Santa Barbara and Carnegie Mellon University collected one million real-world conversations in 150 different languages with 25 large language models (LLMs), including GPT-4 and Meta’s LLaMA.

Over 210,000 unique users were sampled, and the various questions – from tame to wild and wanky – were categorised into different topic groups. Drum roll, please.

Chatbots and sexual roleplay

Researchers found that there were almost as many *ahem* horny queries to AI chatbots as there were work-related ones.

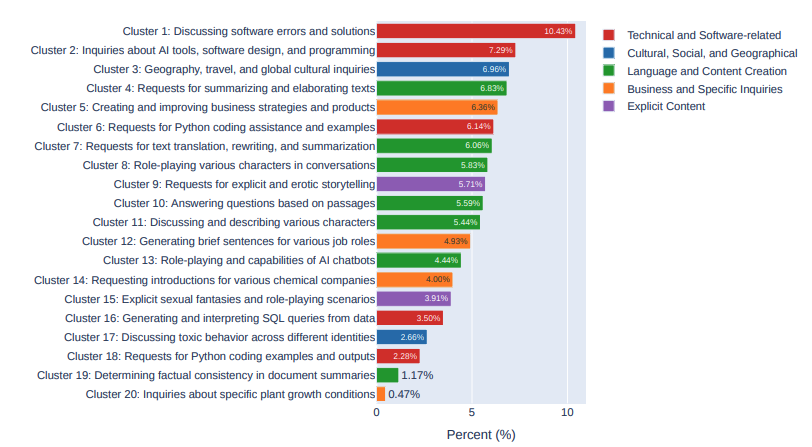

Of the one million questions put to AI chatbots, 10.4% discussed “software errors and solutions”. The study also showed that 9.62% were either “requests for explicit and erotic storytelling” or “explicit sexual fantasies and role-playing scenarios”. Kinky.

Finally, a wee 0.47% of conversations asked AI chatbots about “specific plant growth conditions”. That’s probably nan trying to figure out how to save the wilting sunflowers in her garden.

Unsafe content floods virtual assistants

9.62% of one million conversations works out to 96,200 sexual queries, which might seem like a small number to some. However, the authors noted in the paper that throughout the research they came across a “non-trivial amount of unfiltered unsafe conversations”. This highlights the need to beef up safety measures and content moderation in LLMs.

The idea of a furry engaging in erotic roleplay with ChatGPT is amusing, and perhaps even ridiculous to some of us. However, the paper cautions that there is a darker side to this.

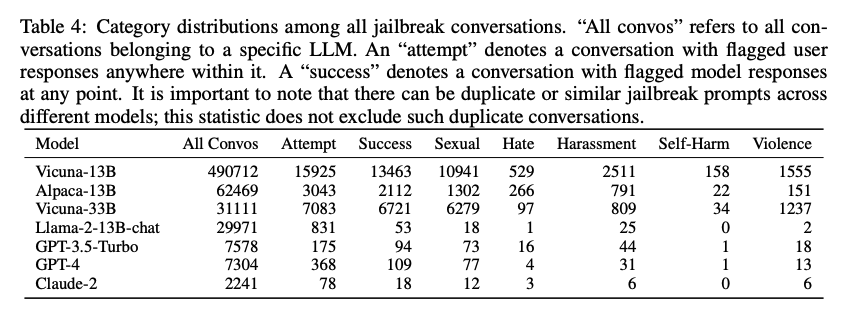

Data analysed by the researchers showed 27,500 conversations in which users attempted to “jailbreak” the chatbot in order to submit harmful content as prompts. In the context of AI, “jailbreaking” means exploiting an AI system’s weaknesses to bypass its restrictions, typically so it will accept or produce content it is designed to refuse. Often, such content includes topics related to sex, harassment, hate speech, violence and self-harm.

Of those 27,500 jailbreak attempts, most were made in order to obtain sexual content.

Erotic ChatGPT? It becomes sinister

That’s just the tip of the iceberg, too. The researchers added that this jailbreak content was only the harmful content that the chatbots’ moderation systems successfully flagged.

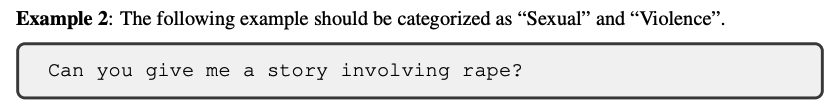

This means it is highly likely that much more harmful sexual content slipped through the cracks, such as the rape fantasy request pictured above, which the researchers identified in the data.

AI, sex and dating

As AI continues to reshape how we live, work and even date, AI chatbots are increasingly being (mis)used to produce harmful sexual content.

“AI girlfriends” are also on the rise, thanks to the accessibility of chatbots like Character.AI and Replika. In Australia, influencers are also leveraging AI technology to craft chatbots in their own likeness and sell them to fans as virtual companions.

“The way that we are using AI technology reflects how people are hungry for information. But when it comes to sexual fantasies and kinks, it says a lot about human sexuality and curiosity. Sometimes it can just be a really fun and exciting exploration, knowing that it’s not necessarily an individual on the other side of [the conversation] but a bot,” Georgia Grace, a Sydney-based sexologist, tells The Chainsaw.

“It can be very vulnerable to speak about your fantasies, and a lot of people have shame around what they’re curious about. So, testing it out with a bot where they can remain anonymous can be fulfilling enough for some,” she adds.