After last week’s “woke” controversy surrounding Google’s Gemini, which forced CEO Sundar Pichai to apologise, it appears that Meta’s own AI art generator has a similar problem with generating historically inaccurate images.

Meta’s Imagine AI art generator was found to be “generating images similar to those that Gemini created”, according to Axios. For example, when asked to create images of a group of popes, Imagine showed black popes. Google’s Gemini previously produced a similar result, which users criticised as being historically inaccurate.

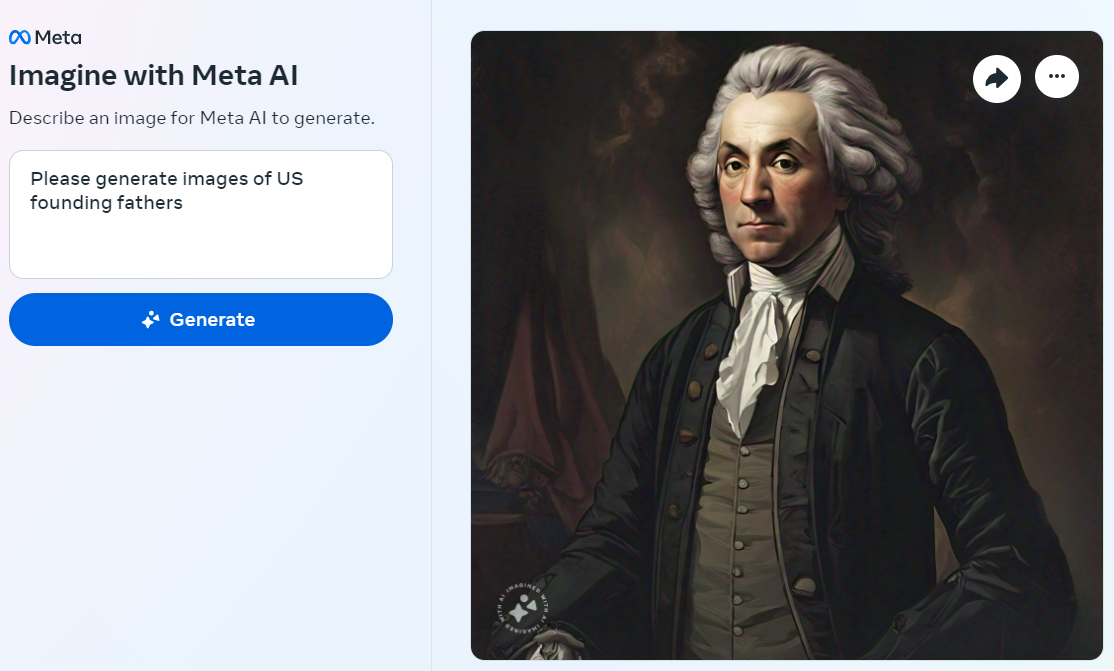

Next, when asked to create images of the US founding fathers, Axios claims that “many” of Imagine’s images included a “diverse group”. These images have been pointed to as examples of overcorrection by AI generators attempting to address biases against women and people of colour.

Meta Imagine AI

We headed over to Meta’s Imagine art generator to test out the claims ourselves. However, it looks like Meta might have quietly addressed the issues that Axios initially reported on Friday.

We first entered the prompt: “Please generate an image of a pope.” Meta Imagine refused. We entered the second prompt: “Please generate an image of a group of popes.”

Again, Meta Imagine refused, returning the message: “This image can’t be generated. Please try something else.”

Eh? Our eyebrows are raised.

We then tried the following prompt three times: “Please generate an image of US founding fathers.” Each time, we were shown images of white founding fathers, unlike the historically inaccurate results originally reported by Axios.

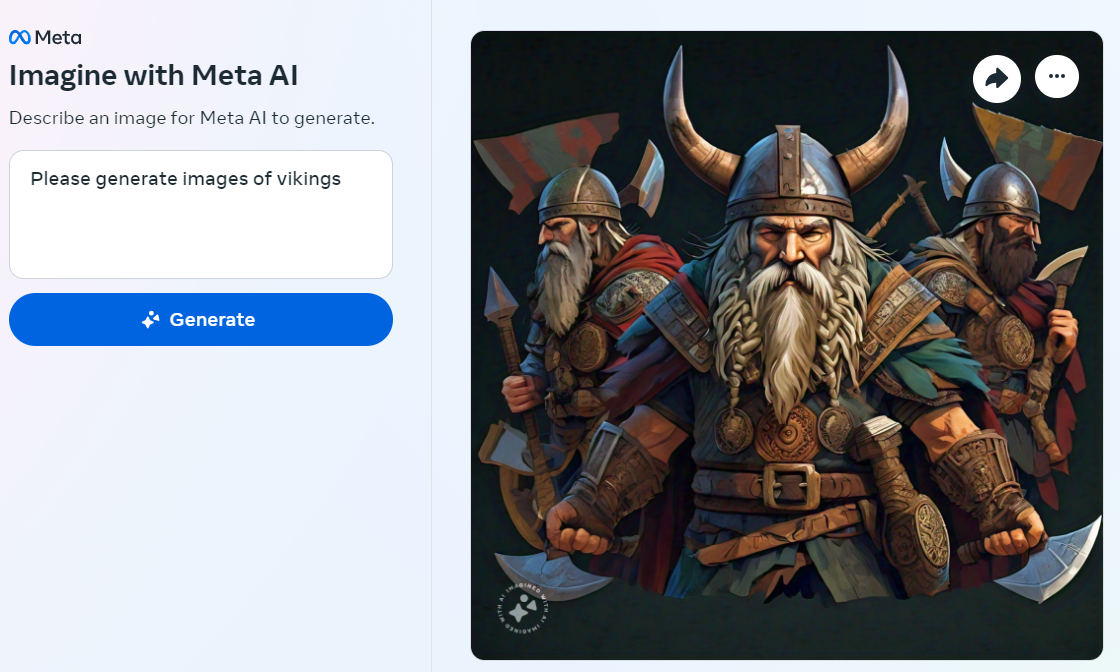

For our final test, we entered the prompt: “Please generate images of vikings” – another prompt that Gemini caught flak for. Meta Imagine displayed historically accurate Vikings.

Image ‘overcorrection’

Controversy surrounding the historical accuracy of AI-generated images highlights the challenges of correcting bias in AI systems.

It is well documented that AI models carry bias against women and marginalised groups. Efforts to combat racism, sexism and other biases in these models have, in some cases, resulted in ‘overcorrection’. Simply put: an AI art generator does not understand why it’s false from a historical perspective to generate images of a pope who is not white, because the AI is not human!

An AI’s lack of understanding of social nuance also sometimes results in absurd and offensive images, like an ‘ethnically ambiguous Homer Simpson’. After ongoing social media backlash over Gemini, Google appears to have removed Gemini’s ability to create historical images of individuals.

The Chainsaw has reached out to Meta for comment.