Fraudsters are growing more sophisticated every day. In their latest scam, they use deepfake AI generators to create hyper-realistic identity documents so they can pose as you and take your money. So what are these scams, and will deepfake fraud affect you?

Synthetic ID scams

Synthetic ID scams happen when fraudsters combine real and fake information to create a new identity.

When a person opens a bank account online, it is now standard to complete ID verification remotely, without going into the bank.

You may be required to take a selfie holding an ID document next to your face, or record a video of yourself reading a prompt out loud. This is to prove that you are who you claim to be.

Criminals can now get past this digital verification process using face-morphing deepfake technology powered by generative AI. This could mean someone opens a bank account as you and commits crimes under your name without your knowledge.

Fraudsters also use synthetic IDs to trick lending businesses. The criminals meticulously build fake credit profiles for these identities from scratch, and then submit loan applications.

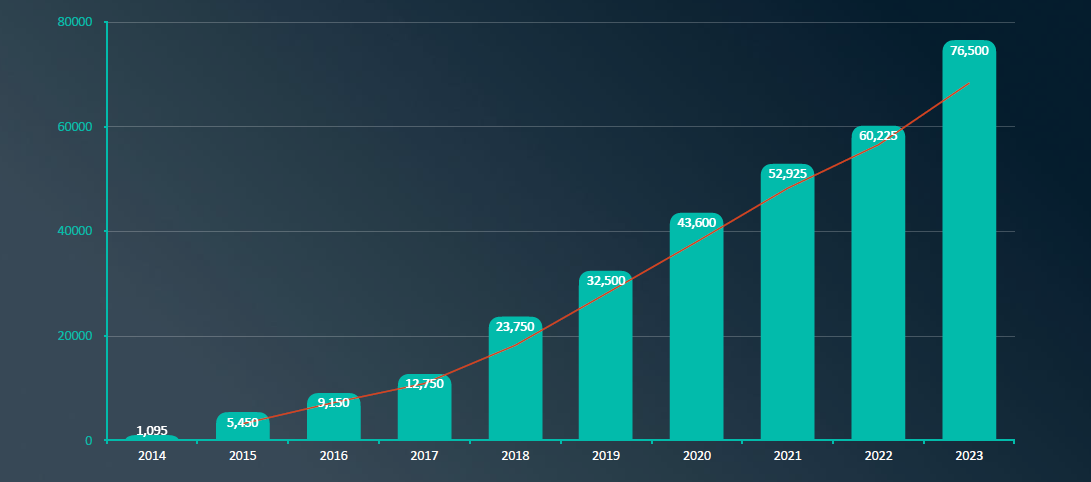

The rise in synthetic ID crimes. Credit: IDVerse

What is the defence plan?

Paul Warren-Tape, the Senior Vice President of Risk and Compliance at IDVerse, says criminals are using free online AI tools to create what looks and feels like a real identity document.

“They enter fictitious information, or stolen information from someone’s letterbox, and create what passes as a real identity document,” Warren-Tape says.

“When most companies do remote verification, they will ask for a photo of an identity document to be held up next to your face on a call, live, in real time. But criminals are using deepfake documents at this stage of the process.”

Three of the four big banks are using IDVerse’s AI-run technology to pick up these fakes at this stage of the process.

“It is about understanding what the fraudsters are capable of, and training the AI algorithm to detect and prevent fraud,” says Warren-Tape. “Our AIs have been trained to precisely identify the micro movements and temporal vibrations of synthetically-developed (in other words, fake) deepfake images and videos for their human likeness.”

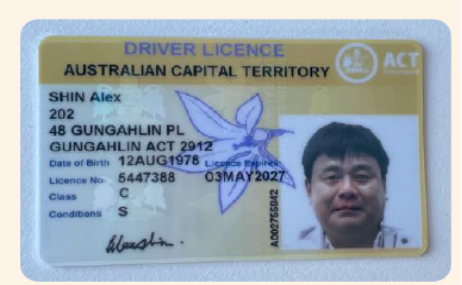

An example of a synthetic ID document, or deepfake fraud, as supplied by IDVerse.

Deepfake fraud: How it could affect you

Warren-Tape says criminals are also using this technology in New South Wales and Queensland to obtain digital driver's licences in other people’s names. They then use these synthetic IDs to commit fraud.

“In New South Wales there’s a known security flaw where I can get a real licence and change all my information to have your name, your address and your photo on it. You would be none the wiser. If someone scans this synthetic licence, it shows up as a real licence. But it’s your information and your photo. Then I can use that to open a bank account or do other nefarious things.”

Criminals also use deepfake IDs to hire cars, then drive off and never return them. They use synthetic IDs to steal money as well, which they then gamble at online casinos.

“After the fraudsters manage to get access to your bank accounts, they will get a line of credit. They then go and spend it or buy gift cards, which they can sell for money.”

Warren-Tape also says synthetic IDs have been used to create fake myGov accounts and have even managed to “steal money from the government via fake tax rebates. There is a huge amount of fraudulent accounts, which didn’t actually tie back to a real person in society.”

Fighting deepfake fraud with AI

Warren-Tape says IDVerse’s AI is being used by institutions such as banks to detect synthetic data.

“Is it a real physical document? Does it have depth perception? Is someone really holding it? Are the physical security features on those documents correct? Is the font correct? And is it not an obviously-tampered-with document? Is it a photocopy? Does it have a bit of paper over the front? We use computer vision with natural language processing, a neural network so it acts like a human brain. We teach it like we would a new fraud assessor who’s at customs to pick up fraudulent documents.”

Warren-Tape admits his system is never going to be 100 percent foolproof. “We are accurate 99 percent of the time in picking up fraudulent activity. There’s still too much identity theft and fraud out there which not only distorts the Australian markets but also causes harm. We know none of that money is being used for good.

“There is also the social impact on people who are the victims of identity theft. It can take up to six months to reclaim your identity and that’s not only financial, but it’s also then the mental stress and the heartache that comes with that as well.”

Feature image: Tom Cruise impersonator Miles Fisher vs the real actor.