In a world where we’re constantly bombarded with information, the promise of AI-powered tools that can cut through the noise is alluring. But as a study released today reveals, the road to an AI-powered future is paved with concerns around misinformation and trust.

The Adobe Future of Trust Study, which surveyed over 1,000 consumers in Australia and New Zealand, paints a picture of a public that is both optimistic and wary of generative AI.

On the bright side, 66% of respondents believe that the technology will make it easier to find information, and 55% think it will boost their productivity. For the average person, this could mean less time spent sifting through endless documents and more time focused on the tasks that matter, such as creative work.

But the study also exposes a deep undercurrent of mistrust. Nearly half of those surveyed worry generative AI will create inaccurate information that will be taken as fact. And 78% believe that misinformation and deepfakes will have an impact on upcoming local and foreign elections.

This is where Adobe’s new AI assistants come in. The Adobe Acrobat AI Assistant promises to make document management a breeze by allowing users to converse with their files, generate content and format text with simple commands. Adobe says this will allow users to summarise long reports in seconds.

Obviously, this can already be done with myriad tools on the market today, but that would involve copying the content of a document into a model like Claude or ChatGPT, which is both tedious and prone to error.

A tool that lives natively inside Adobe’s software will be able to point to the source of every piece of information in its summaries. If a summary includes a certain statistic, it will be able to take you to the specific part of the document that statistic came from.

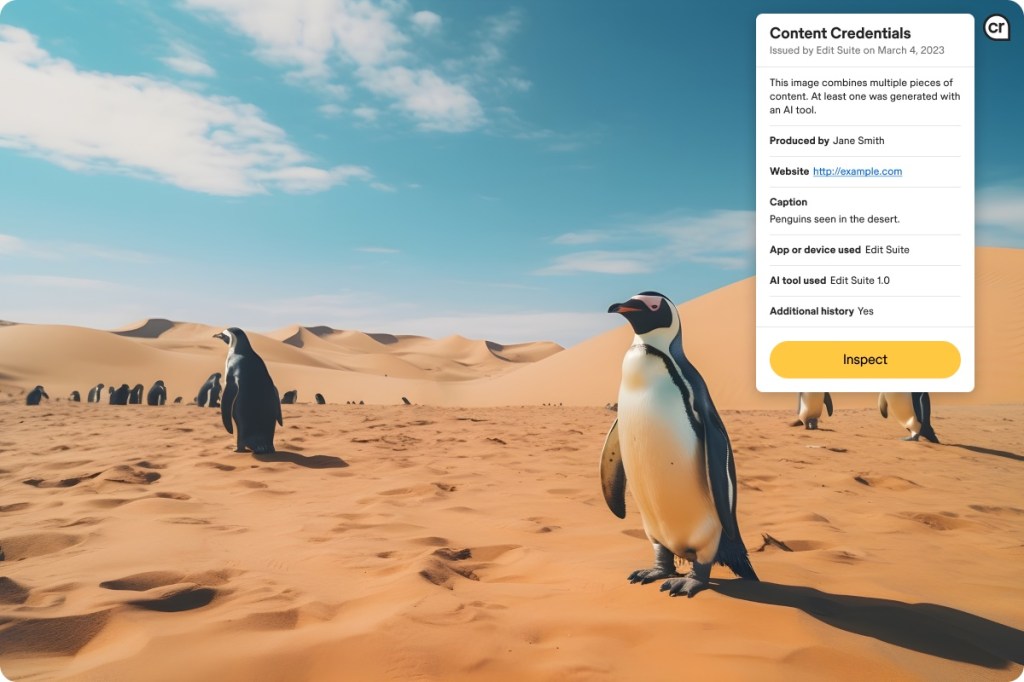

Adobe is also touting its new Content Credentials feature as a solution to the misinformation problem. Essentially a nutrition label for digital content, Content Credentials use cryptography and watermarking to provide a tamper-evident record of who created a piece of content and how it has been modified. It’s a promising step towards transparency, but it remains to be seen whether it will be enough to quell concerns around AI-generated content.

The stakes are high, not just for Adobe, but for society as a whole. Nearly nine in 10 people surveyed (87%) believe governments and tech companies need to work together to protect elections from the threat of misinformation. And 80% think that candidates should be prohibited from using generative AI in their campaigns altogether.

Technology is advancing at breakneck speed, and we may soon be living in a world where all images and videos are met with immediate scepticism (if we aren’t there already). Is this a real video of Donald Trump speaking? Can I trust that this is actually a photo of Joe Biden? Every day it becomes more difficult to tell what is real. Slapping Adobe’s Content Credentials on images is a good step towards combating this justifiable information paranoia.

Content Credentials won’t stop the wave of misinformation that’s sure to continue to flood the internet, especially in 2024, a year in which billions of people in dozens of countries are set to vote in their national elections.

Still, it’s a decent start.