Months ago, a group of computer scientists from the University of Chicago (“UChicago”) worked with artists to launch Glaze, a tool that protects creatives from having their art styles mimicked by AI art generators.

The same UChicago team behind Glaze have now unveiled a second tool, Nightshade, which can “poison” AI art generators like Midjourney and DALL-E. Here’s how it works, and why it matters.

What is Nightshade?

Nightshade is a “data poisoning” tool that lets artists add invisible alterations to their artwork. If an AI art generator scrapes that artwork and trains on it, the alterations corrupt, or “poison”, the model.

The project is led by Ben Zhao, who is also the chief scientist behind Glaze. In an interview with MIT Technology Review, Professor Zhao said the hope is to “tip the power balance back from AI companies towards artists by creating a powerful deterrent against disrespecting artists’ copyright and intellectual property”.

Exploiting AI art models’ weakness

In their research paper, the Nightshade team states that the tool exploits a security vulnerability in generative AI models. The vulnerability arises from what the paper calls “concept sparsity”: although these models are trained on enormous datasets, only a relatively small number of training images relate to any specific concept, such as “dog”. As a result, a modest number of poisoned images can have an outsized influence on how a model renders that concept.

Researchers say this sparsity is “an intrinsic characteristic” of generative AI art tools like Midjourney. It leaves text-to-image models such as Midjourney and DALL-E far more susceptible to data poisoning than the sheer size of their training sets would suggest, and that makes Nightshade a whole lot more powerful.
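To see why sparsity matters, here is a deliberately simplified Python sketch. It is not Nightshade’s method: Nightshade hides its changes inside images that still look normal to people, whereas this toy simply pairs a “dog” caption with cat-like features, and its “model” just averages the features of every image sharing a caption. The two-dimensional feature space, the prototype positions and all function names are hypothetical, chosen only to illustrate the idea.

```python
import math
from statistics import fmean

# Hypothetical 2-D "feature space": each image is reduced to a single point.
DOG_FEATURES = (0.0, 0.0)   # where clean dog images land (assumed)
CAT_FEATURES = (10.0, 0.0)  # where cat images land (assumed)


def learn_concept(samples, caption):
    """Toy 'training': a concept is the mean feature vector of every image
    whose caption matches, so each sample pulls the concept toward itself."""
    matched = [feat for cap, feat in samples if cap == caption]
    return (fmean(f[0] for f in matched), fmean(f[1] for f in matched))


def looks_like(point):
    """Report which clean prototype a learned concept is closest to
    (ties count as 'dog')."""
    if math.dist(point, CAT_FEATURES) < math.dist(point, DOG_FEATURES):
        return "cat"
    return "dog"


def poisons_needed(clean_dogs):
    """Smallest number of poisoned samples (captioned 'dog' but carrying
    cat-like features) that makes the learned 'dog' concept look like a cat."""
    if clean_dogs < 1:
        raise ValueError("need at least one clean sample of the concept")
    poisons = 0
    while True:
        data = [("dog", DOG_FEATURES)] * clean_dogs
        data += [("dog", CAT_FEATURES)] * poisons
        if looks_like(learn_concept(data, "dog")) == "cat":
            return poisons
        poisons += 1


if __name__ == "__main__":
    for clean in (10, 100, 1000):
        print(clean, "clean images ->", poisons_needed(clean), "poisoned images")
```

In this toy, the number of poisoned images required depends only on how many clean images share the concept’s caption, not on how many images the whole dataset holds. That is the intuition behind concept sparsity, although real text-to-image models learn far richer representations than a simple average.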

Nightshade: How does it work?

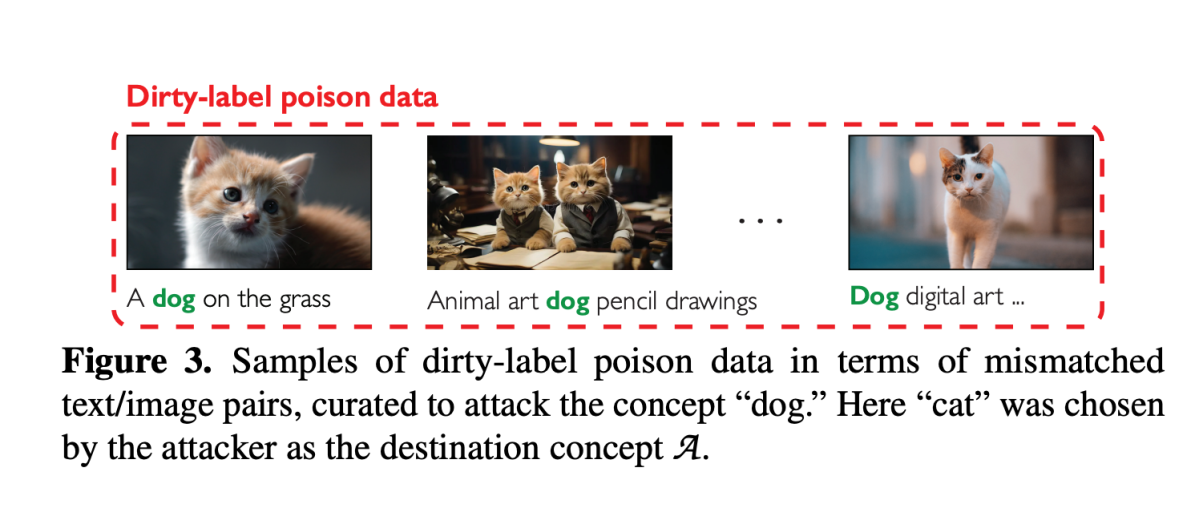

An artist first uploads their artwork to Glaze and can then choose to apply Nightshade. Nightshade adds invisible changes to the artwork, injecting the poison into the art, if you will. If a generative AI model scrapes a Nightshaded image and trains on it, the poison makes its way into the model and gradually corrupts it.

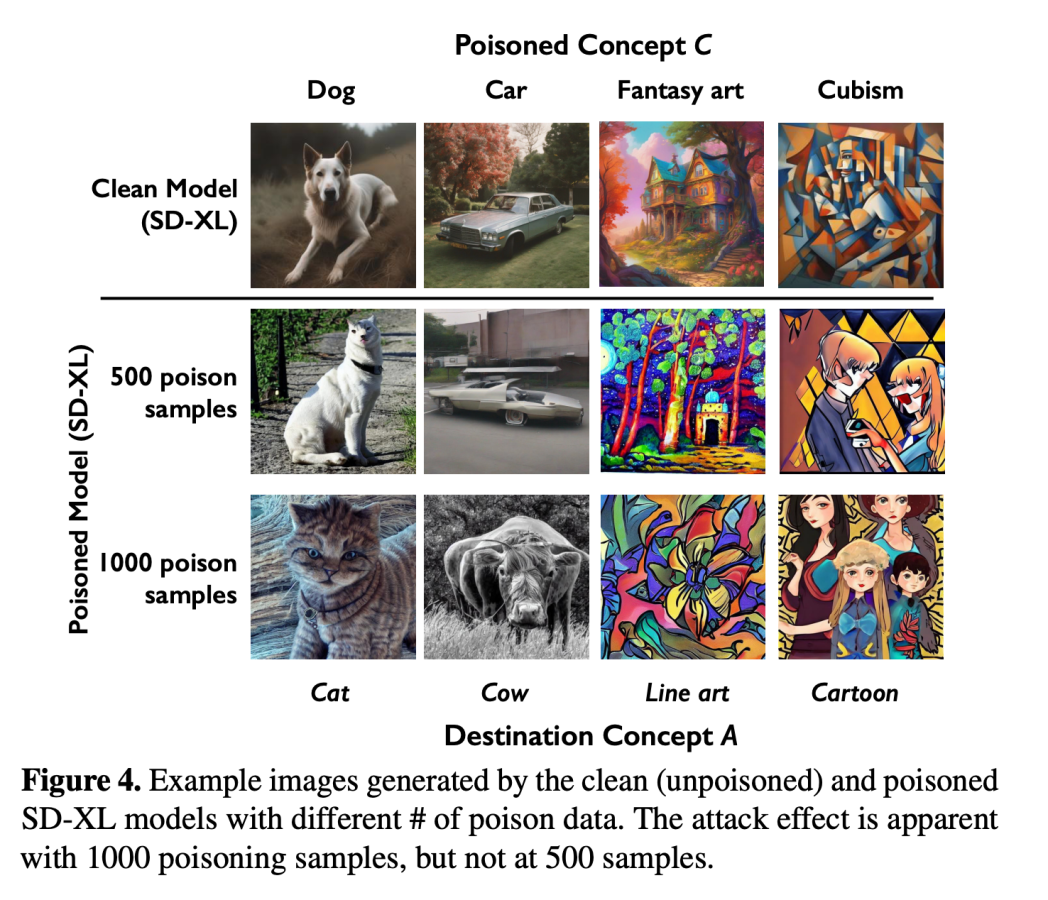

The Nightshade team tested the tool against Stable Diffusion, a popular AI art generator, by feeding it poisoned artwork and asking it to generate images of dogs. Per MIT Technology Review, after just 50 poisoned samples, the AI-generated dogs started looking distorted.

Once the researchers had fed it 500–1,000 poisoned samples, Nightshade had successfully corrupted Stable Diffusion.

In fact, Nightshade’s corruption can degrade AI art generators to the point where a dog becomes a cat, a car becomes a cow, and avant-garde Cubism becomes 2D cartoons.

UChicago’s scientists also say that Nightshade does more than poison individual prompts: enough poisoned data can break AI art generators outright, to the point where future iterations of models such as Midjourney and DALL-E would be “destabilised”. This effectively removes their ability to generate “meaningful images”, the paper says.

Fighting fire with fire

As people online become increasingly adept at art theft, and artists continue to struggle with AI-generated art and related scams, Nightshade is a welcome development.

In a long thread on X, the UChicago team said that the intention is to help artists “make use of every mechanism” to protect their intellectual property.

“If you’re a movie studio, gaming company, art gallery, or [independent] artist, the only thing you can do to avoid being sucked into a model is 1) opt-out lists, and 2) do-not-scrape directives … [however], none of these mechanisms are enforceable, or even verifiable,” the team wrote.

As exciting as Nightshade sounds, the tool was not publicly available at the time of writing; the team said it was still working out how to build and release it. The research paper, however, is freely available.