Two scientists have written a research paper about diabetes from scratch with help from ChatGPT – and managed to produce a complete paper in an hour.

Roy Kishony and his student Tal Ifargan from the Technion – Israel Institute of Technology wanted to examine the advantages and disadvantages of OpenAI’s powerful chatbot when it comes to assisting researchers in academia.

“We need a discussion on how we can get the benefits with less of the downsides,” Kishony tells Nature.

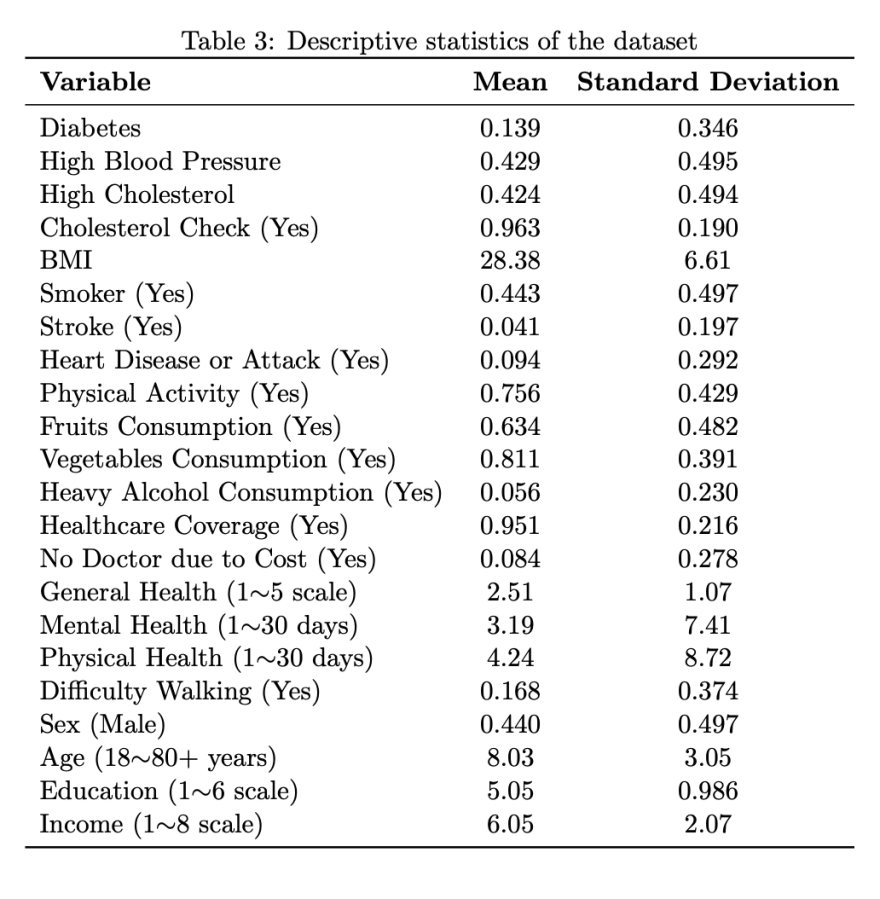

The scientific paper authored by ChatGPT was titled ‘The Impact of Fruit and Vegetable Consumption and Physical Activity on Diabetes Risk among Adults’. According to Nature, Kishony and Ifargan pulled publicly available data about Americans’ diabetes status from the US Centers for Disease Control and Prevention (CDC).

Next, the researchers asked ChatGPT to perform a range of tasks to complete the paper. These included drawing up a goal for the study, writing code, and performing the data analysis. In the end, ChatGPT produced a 19-page paper about diabetes.

Pretty good, right? Let’s see.

Structure? Check. Fluency? Check.

According to Kishony and Ifargan, the paper, overall, was “fluent, insightful, and presented in the structure of a scientific paper.” The paper’s abstract reads:

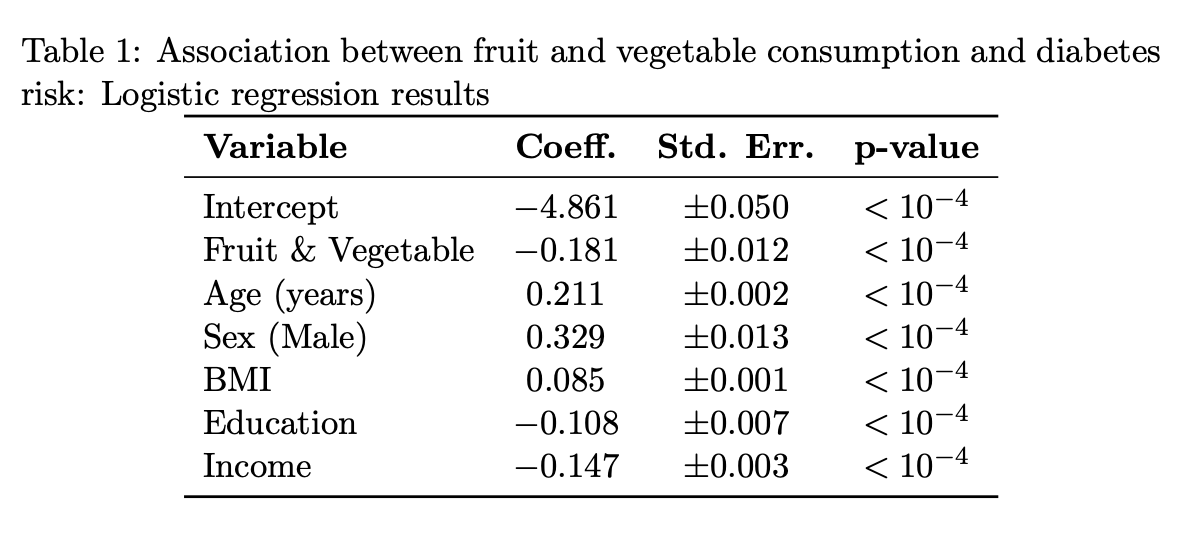

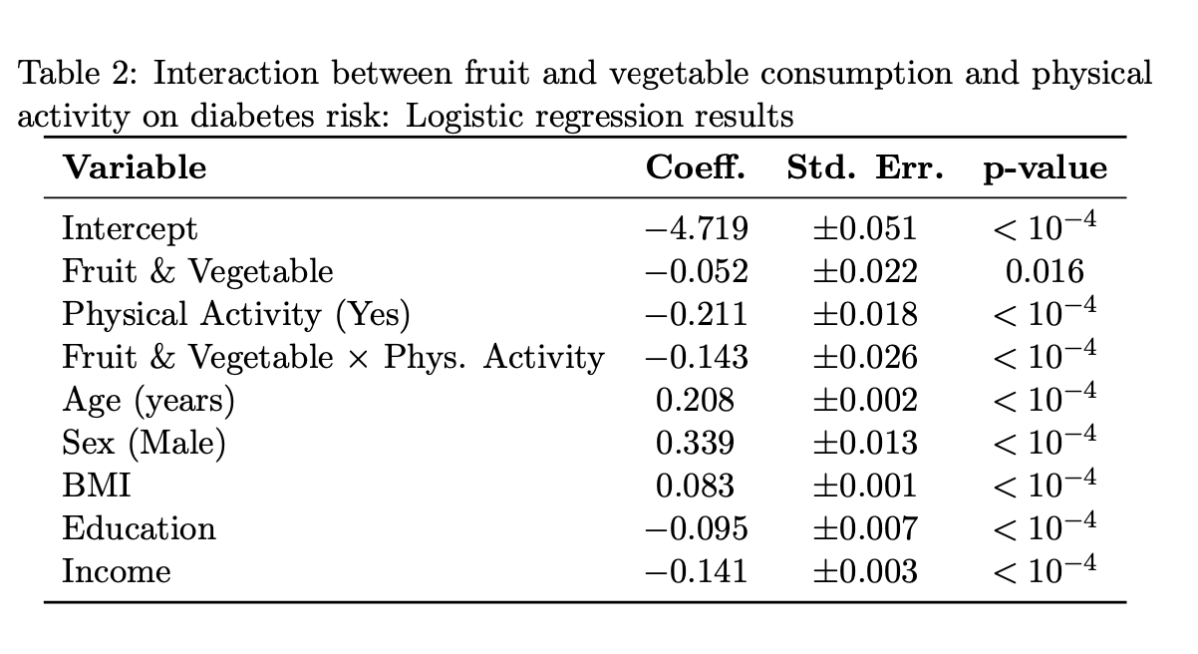

… which reads coherently and sounds professional. There are tables laying out some fairly convincing statistics, too. At first glance, everything seems pretty well thought out.

ChatGPT: Knowledge? Nope.

However, the scientists pointed out that on its first attempt, ChatGPT actually “generated code that was riddled with errors.” They had to provide feedback to the chatbot and ask it to correct itself to arrive at the right answers.

The main issue, the pair noted, is that ChatGPT has a tendency to hallucinate. In other words, it makes sh*t up. For the research, the chatbot “generated fake citations and inaccurate information,” states Nature.

Reports of ChatGPT’s hallucinations are not new. In fact, hallucination remains a common behaviour across AI chatbots, including Microsoft’s Bing and Google’s Bard. Two notable figures in Australia and the US have stated that they plan to sue OpenAI for defamation – all due to incorrect information and/or scenarios conjured up by ChatGPT.

In conclusion, with ChatGPT, there remain “many hurdles to overcome before the tool can be truly helpful,” notes Nature. Professionals like lawyers have landed themselves in trouble, too, after the chatbot cited non-existent cases and confidently passed them off as real. So, for now, it is best not to treat everything ChatGPT spits out as gospel.