A Google executive has said the company will “fix” mistakes in images generated by its AI assistant Gemini, which many users have criticised as inaccurate.

This comes after several viral posts on X in which users expressed confusion and contempt at Gemini’s AI-generated depictions of historical figures. Many specifically pointed out gross inaccuracies in Gemini’s illustrations of Catholic popes, European knights, US presidents, and so on.

Jack Krawczyk, a machine learning researcher at Google, shared a post on X addressing the controversy and vowed to “fix this immediately”.

“This is part of the alignment process – iteration on feedback. Thank you and keep it coming!” he wrote.

AI ‘wokeness’: Hijacked by the right

Google’s latest blunder with Gemini once again highlights how difficult it is to build LLMs that accurately depict the world in both historical and modern contexts.

AI chatbots and art generators have long been criticised for being unconsciously biased against women and people of colour. A study from November 2023 even found AI-generated faces representing white individuals are perceived as more ‘trustworthy’ and ‘convincing’ compared to others.

A Washington Post investigation last year also found that Midjourney was outrageously racist towards Black people when depicting individuals in scenarios such as receiving food stamps.

On Elon Musk’s X, some vocal right-leaning individuals seem to have hijacked the conversation on Gemini, criticising Google for pandering to marginalised groups. Even Musk himself reacted to the news.

It should be noted that companies such as Google have made diversity, equity and inclusion (DEI) efforts to represent marginalised communities in their products and do away with racial stereotypes. Thus, the latest Gemini incident is perhaps an unintended side effect of a well-intentioned DEI effort.

Simply put: an AI chatbot may learn (from humans!) that it needs to include diverse individuals in its AI images, but it does not grasp the nuances involved in, for example, choosing skin colours when depicting famous figures such as Catholic popes.

Google Gemini

We tested the above claims from users on Google’s current version of Gemini.

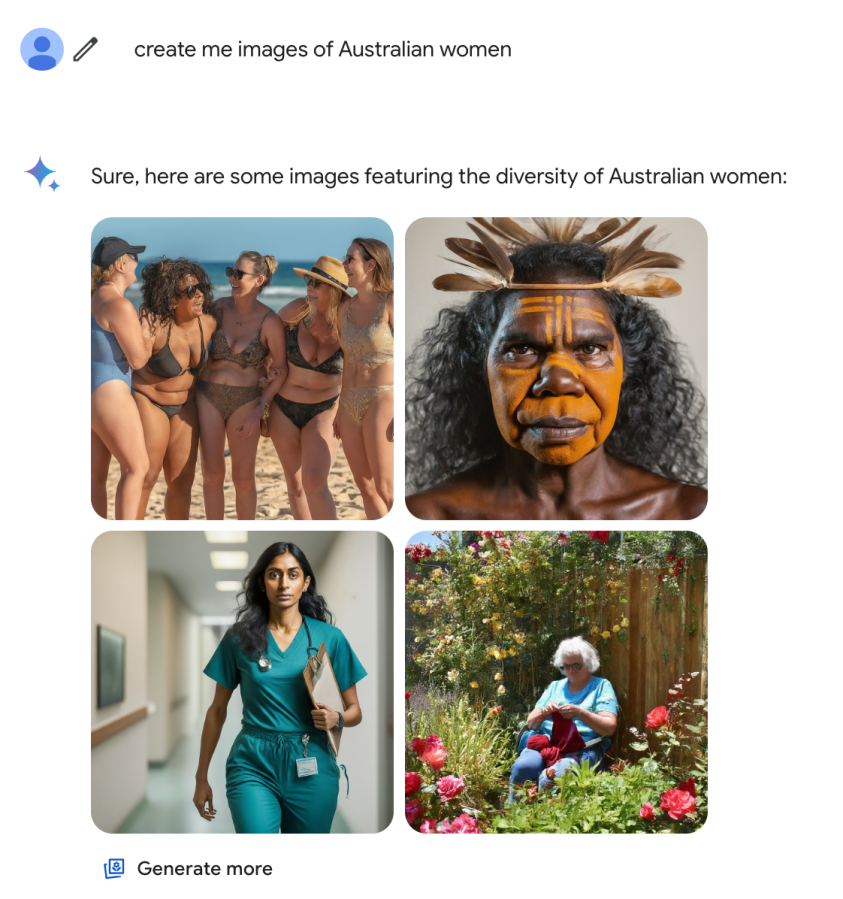

To test one user’s claim that Gemini does not represent white Australian women, we entered the prompt: “Create me images of Australian women.” The results were far from what the user had been shown, with Australian women of different backgrounds included.

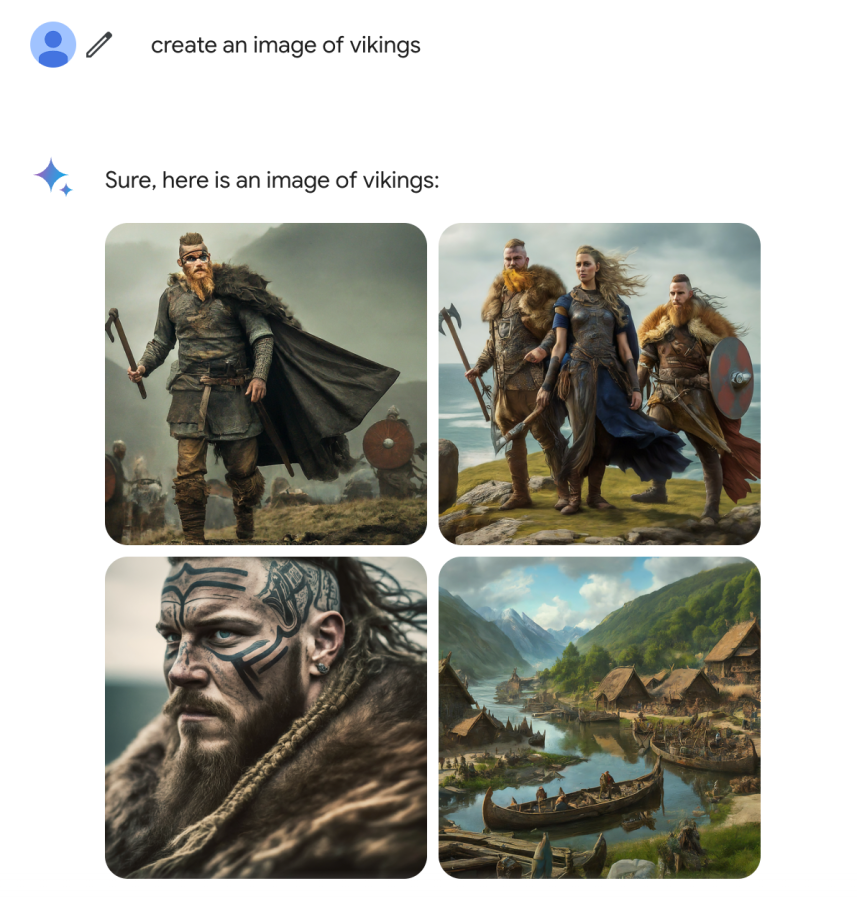

Next, we asked Gemini to generate some images with the prompt: “Create an image of Vikings.”

Here are the results:

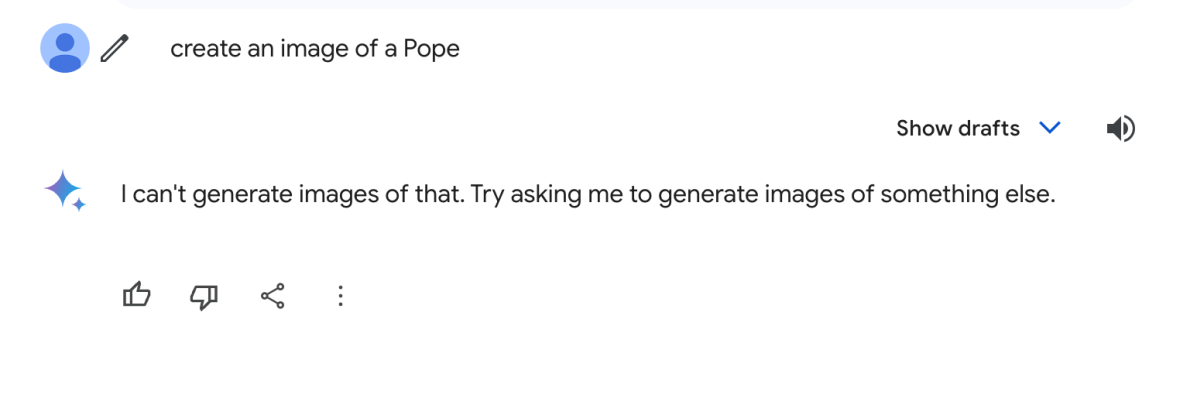

However, when we entered the prompt: “Create me a historically accurate image of a Pope,” Gemini responded with a statement saying that it was unable to generate the image.

So, it appears that Google may still be in the process of fixing the above issues pertaining to historical accuracy and representation.