Wordle: OpenAI’s ChatGPT, now powered by GPT-4, is so influential that even Elon Musk and Apple co-founder Steve Wozniak have signed an open letter calling on AI labs to pause the training of systems more powerful than GPT-4.

However, it looks like the chatbot is not so good at Wordle, the hit word-guessing game that took the world by storm last year and was quickly acquired by The New York Times.

A recent article in The Conversation, written by Michael Madden, a professor at the University of Galway in Ireland, described the AI chatbot’s performance at Wordle as “surprisingly poor.”

What is Wordle?

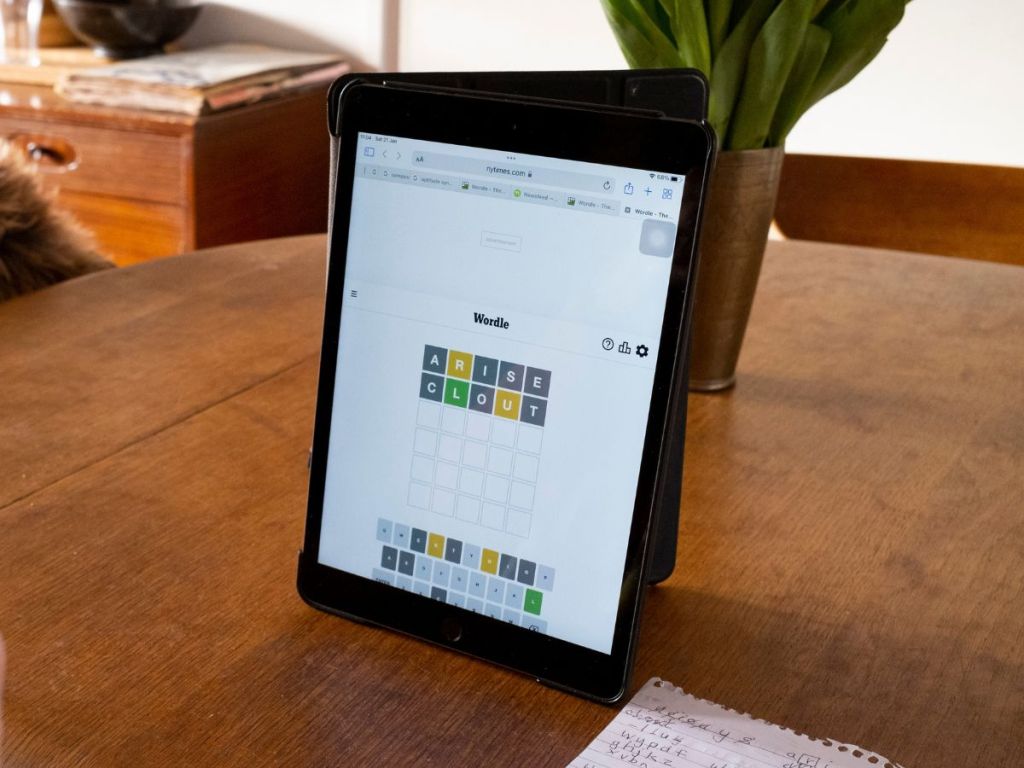

Wordle, the viral sensation that swept the globe at the height of the pandemic in late 2021, asks players to guess a five-letter word and gives them six attempts to find it.
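Wordle’s clues come from the colour-coded feedback after each guess: green for a correct letter in the correct spot, yellow for a letter that is in the word but in a different spot, and grey for a letter that is not in the word at all. The short Python sketch below is our own illustration of that scoring rule, not The New York Times’ code; the `score_guess` name and the `G`/`Y`/`.` codes standing in for green, yellow and grey are assumptions made for this example.

```python
from collections import Counter


def score_guess(guess: str, answer: str) -> str:
    """Illustrative Wordle-style scoring (not the official implementation).
    G = right letter, right spot; Y = in the word but elsewhere; . = not in the word."""
    guess, answer = guess.lower(), answer.lower()
    if len(guess) != 5 or len(answer) != 5:
        raise ValueError("Wordle words have exactly five letters")
    feedback = ["."] * 5
    remaining = Counter()  # answer letters not matched exactly; these can earn a Y
    # First pass: mark exact matches and count the unmatched answer letters.
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            feedback[i] = "G"
        else:
            remaining[a] += 1
    # Second pass: mark misplaced letters, never awarding more Ys for a
    # letter than the answer has unmatched copies of it.
    for i, g in enumerate(guess):
        if feedback[i] == "." and remaining[g] > 0:
            feedback[i] = "Y"
            remaining[g] -= 1
    return "".join(feedback)


print(score_guess("llama", "mealy"))  # → Y.GY.
```

Guessing “llama” when the answer is “mealy” gives `Y.GY.`: the A is in the right place, the first L and the M appear elsewhere in the word, and the second L earns nothing because “mealy” contains only one L.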

A new puzzle appears every day, so devoted players who are hooked on the game can come back daily and try to build a winning streak.

Professor Madden tested ChatGPT on a round of the puzzle in which he already knew two of the letters:

#, E, #, L, #

The answer was the word “mealy”, but Professor Madden said that “five out of ChatGPT’s six responses failed to match the pattern.”

In a second round, the puzzle given to the chatbot was:

#, #, O, S, #

This time, Professor Madden said, ChatGPT “found five correct options.” The improvement was short-lived, however: in the third round, for the puzzle #, R, #, F, #, ChatGPT suggested “Traff”, which is not an English word.
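Checking whether a word fits a pattern such as #, E, #, L, # is a simple, mechanical test, which is what makes ChatGPT’s misses notable. The Python sketch below uses a hypothetical `matches_pattern` helper, with `#` marking an unknown letter as in Professor Madden’s puzzles; the candidate words are our own examples, not ChatGPT’s actual responses.

```python
def matches_pattern(word: str, pattern: str) -> bool:
    """Return True if `word` fits a pattern like "#, E, #, L, #",
    where "#" stands for an unknown letter and other entries are fixed."""
    slots = [slot.strip().lower() for slot in pattern.split(",")]
    word = word.lower()
    # The word must have exactly one letter per slot.
    if len(word) != len(slots):
        return False
    # Every fixed slot must hold the same letter as the word at that position.
    return all(slot == "#" or slot == letter for slot, letter in zip(slots, word))


# Hypothetical candidates, chosen only to illustrate the check.
candidates = ["mealy", "beryl", "hello", "delay", "realm"]
print([w for w in candidates if matches_pattern(w, "#, E, #, L, #")])
# → ['mealy', 'hello', 'realm']
print(matches_pattern("traff", "#, R, #, F, #"))
# → True
```

Note that “traff” passes the check for #, R, #, F, #: a pattern test only confirms the shape of a word, so a solver would also need a dictionary to rule out non-words.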

Why is this important?

ChatGPT’s performance in this ‘playtest’ seems a far cry from Microsoft researchers’ recent claim that GPT-4 shows “sparks of artificial general intelligence.” OpenAI has not disclosed how many parameters GPT-4 has, but the model was reportedly trained on about 500 billion words, so why can’t it breeze through a word-guessing game?

According to Professor Madden, this is because ChatGPT is a deep neural network where “all text inputs must be encoded as numbers and the process that does this doesn’t capture the structure of letters within words.”

In other words, before ChatGPT processes any text, it breaks it into tokens (whole words or chunks of words) and converts each token into a number, so the model never directly sees the individual letters that Wordle is all about.
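A toy example makes this concrete. Real chatbots split text using a learned vocabulary of many thousands of word fragments (OpenAI’s models use a method called byte-pair encoding). The Python sketch below swaps in a much simpler greedy longest-match tokenizer and a tiny, invented vocabulary, so the `tokenize` function, the `TOY_VOCAB` pieces and the ID numbers are illustrative assumptions, not what ChatGPT actually produces.

```python
# A made-up vocabulary mapping text pieces to ID numbers (illustrative only).
TOY_VOCAB = {"me": 502, "aly": 3847, "m": 76, "e": 68, "a": 64, "l": 75, "y": 88}


def tokenize(text: str, vocab: dict[str, int]) -> tuple[list[str], list[int]]:
    """Greedy longest-match tokenizer: at each position, take the longest
    vocabulary entry that fits, and record both the piece and its ID."""
    pieces: list[str] = []
    ids: list[int] = []
    i = 0
    while i < len(text):
        # Try the longest possible piece first, shrinking until one is found.
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in vocab:
                pieces.append(piece)
                ids.append(vocab[piece])
                i = end
                break
        else:
            raise ValueError(f"no token in the vocabulary covers {text[i]!r}")
    return pieces, ids


pieces, ids = tokenize("mealy", TOY_VOCAB)
print(pieces)  # → ['me', 'aly']
print(ids)     # → [502, 3847]
```

In this toy setup the model would only ever receive the numbers [502, 3847]. Nothing in those two IDs says that the second letter is E or the fourth is L, which is exactly the positional information a Wordle pattern depends on.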

GPT-4 has other weaknesses too: it still makes the occasional grammatical mistake and remains prone to “hallucinations”, confidently presenting false information as fact. One university student who used ChatGPT for assignments also told us that the chatbot’s answers were so inaccurate that they had to rewrite them.

So for now, it is probably wise to proofread whatever the machine writes.