A couple of days ago, we published a story about how a Chinese man’s AI girlfriend became an accidental overnight hit in his home country. Today, Mozilla, the non-profit behind the Firefox browser, shared a report that reveals how these AI girlfriend chatbots are actually… cold, cunning, data-harvesting robots.

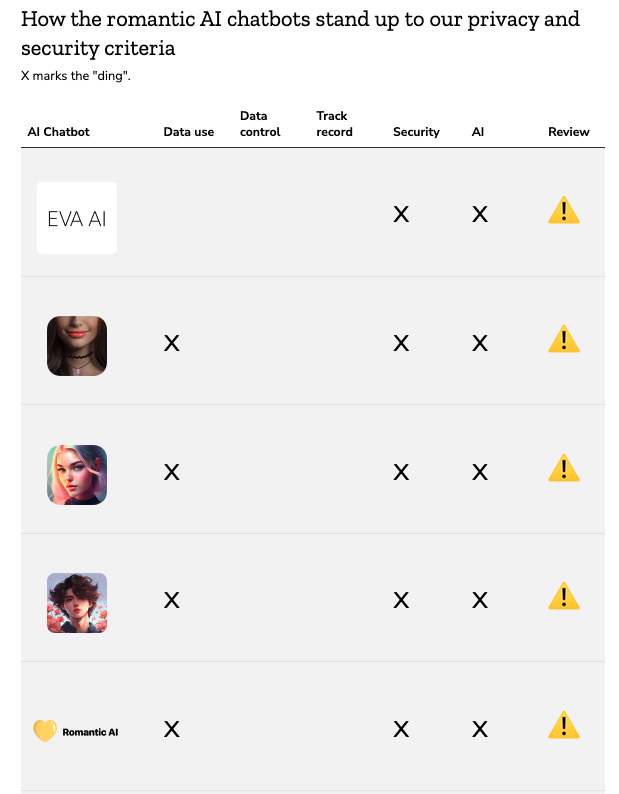

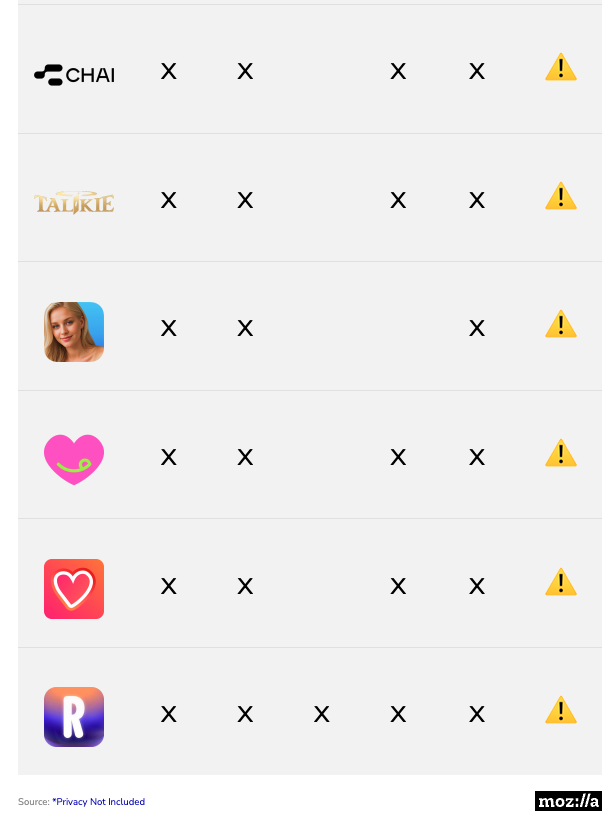

Mozilla analysed a total of eleven AI ‘romance’ chatbots, and discovered that users are “dealing with a whole ‘nother level of creepiness and potential privacy problems”. In fact, the chatbots are so sinister that they earned a ‘*Privacy Not Included’ label from Mozilla, the “worst” privacy label for tech products or services.

The company even named and shamed the AI girlfriend chatbots in question. They are:

- Replika

- EVA

- Mimico

- Genesia

- Talkie

- Chai

- Anima

- iGirl

- Romantic AI

- CrushOn.AI

Your stalker AI girlfriend

To start, many of the AI girlfriend chatbots have dodgy privacy policies. Worse, these policies are often buried in their Terms & Conditions, a section which most of us can’t be bothered to read.

Mozilla’s report states that companies behind the AI girlfriend chatbots “take no responsibility for what the chatbot might say or what might happen to you as a result.” Take Talkie’s Terms of Service as an example:

“YOU EXPRESSLY UNDERSTAND AND AGREE THAT Talkie WILL NOT BE LIABLE FOR ANY INDIRECT, INCIDENTAL, SPECIAL, DAMAGES FOR LOSS OF PROFITS INCLUDING BUT NOT LIMITED TO, DAMAGES FOR LOSS OF GOODWILL, USE, DATA OR OTHER INTANGIBLE LOSSES (EVEN IF COMPANY HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES), WHETHER BASED ON CONTRACT, TORT, NEGLIGENCE, STRICT LIABILITY OR OTHERWISE RESULTING FROM: (I) THE USE OR THE INABILITY TO USE THE SERVICE…”

Big yikes. This might explain why some of the companies behind these AI companions have yet to provide adequate redress after tragedies allegedly caused by their chatbots. Last year, Belgian media reported that a man had died by suicide after allegedly being persuaded to do so by an AI girlfriend from Chai.

Tell her everything – at your own risk

Mozilla also states that “almost none” of the AI girlfriends “do enough to keep your personal data safe”. Now, think of how these AI girlfriends coax and encourage lonely users to share their most private secrets. It’s a massive red flag!

According to Mozilla’s report, 73 percent of the AI chatbots “haven’t published any information on how they manage security vulnerabilities”, while 64 percent “haven’t published clear information about encryption and whether they use it”.

54 percent of the AI girlfriend chatbots also do not allow the user to delete their personal data. In other words, your AI girlfriend will remember every little thing you’ve ever told her.

These AI companions also have a shocking average of 2,663 trackers per minute on their platforms. Trackers are “little bits of code that gather information about your device”. So, these AI girlfriends are armed with surveillance trackers to harvest as much of your data as possible.

“Not your friends”

Mozilla researcher Misha Rykov cautioned that “AI girlfriends are not your friends”. On the contrary, you might be in a toxic relationship without even realising it:

“… Although they are marketed as something that will enhance your mental health and well-being, they specialise in delivering dependency, loneliness, and toxicity, all while prying as much data as possible from you.”

It’s important to realise that these AI girlfriends are products built by companies to make a profit. In Mozilla’s words, they’re marketed as “soulmates for sale”, offering the illusion of a genuine relationship in order to achieve something more sinister.

So, the next time you think you’re living out a real-life movie plot from Her, perhaps take some time to go through the AI girlfriend’s privacy policies.