Microsoft’s latest research paper claims that OpenAI’s GPT-4, its latest multimodal language model that now powers ChatGPT, shows “sparks of artificial general intelligence.” In other words, the researchers are saying that GPT-4 is showing early signs of human-level intelligence, enough to make you scared, tbh.

What is “artificial general intelligence”?

According to a definition laid out at a 2009 conference, “artificial general intelligence” (AGI) refers to “the original and ultimate goal of AI – to create broad human-like and transhuman intelligence, by exploring all available paths including theoretical and experimental computer science, cognitive science, neuroscience, and innovative interdisciplinary methodologies.”

In simpler terms, AGI means AI systems with a level of intelligence that’s on par with that of a regular human being. That requires systems capable of general cognitive tasks like reasoning and problem-solving, not just one narrow skill.

GPT-4’s “human-level” performance

Microsoft’s researchers put GPT-4 through several tasks including maths, coding, and playing games to test its ability to solve problems and “learn quickly and learn from experience.”

They concluded that GPT-4 “can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting.” What the heck.

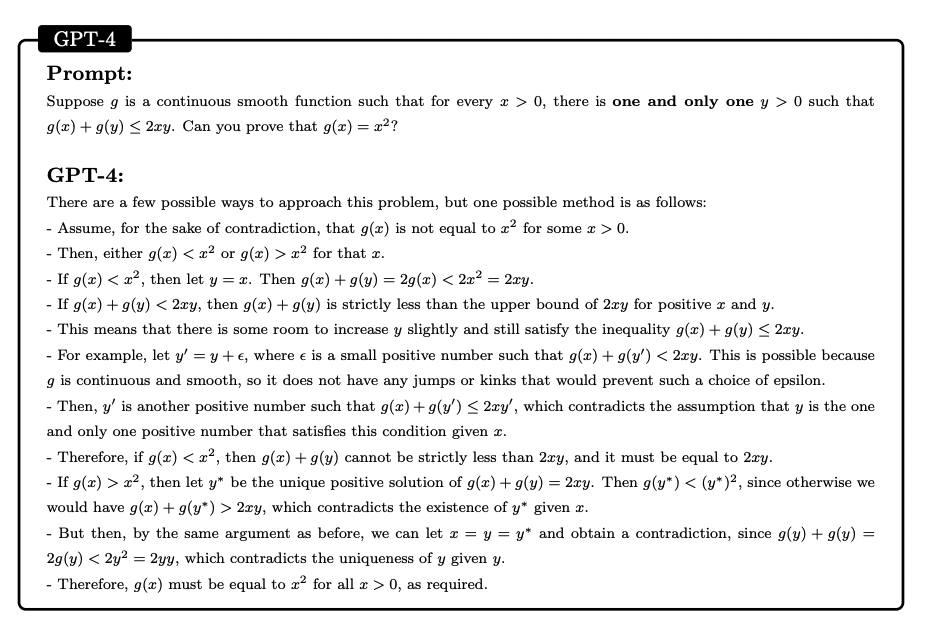

An example provided in the research paper shows GPT-4 tackling a question that appeared in the 2022 International Mathematical Olympiad (IMO). Researchers said that solving the problem required a “more creative approach,” which GPT-4 did not take, but it nevertheless “manages to produce a correct proof.”

Another example shows GPT-4 getting creative and drawing unicorns in TikZ, a language for creating graphics in the LaTeX typesetting system. Researchers concluded that there is a “clear evolution in the sophistication of GPT-4’s drawings.”

Microsoft: Don’t fret (yet)

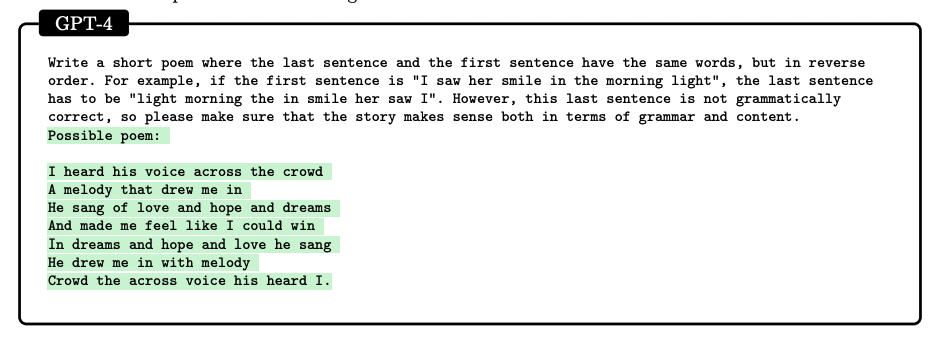

Shooketh? Don’t be. Researchers clarified that for some prompts, GPT-4 got certain details wrong and needed some ‘coaching’ to arrive at the right answer. For example, when asked to generate a poem with some restrictions, GPT-4 made a grammatical mistake.

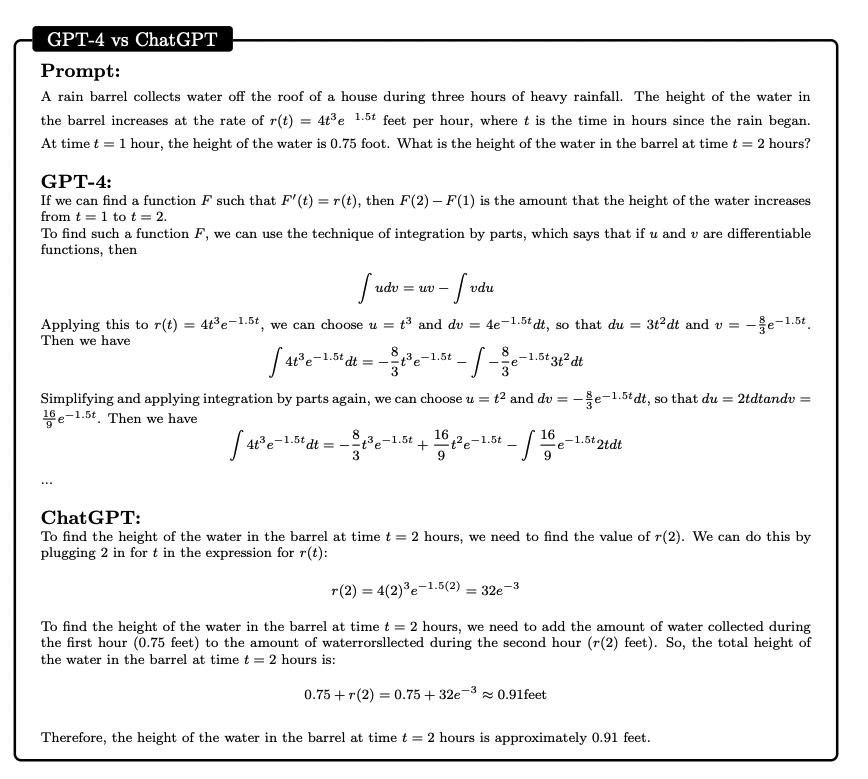

In one AP maths question, GPT-4 produced a “wrong final answer due to a calculation error” whereas ChatGPT produced an “incoherent argument.”

Microsoft, GPT-4 and intelligence

Microsoft’s researchers stressed that while GPT-4 is “at or beyond human-level for many tasks, overall its patterns of intelligence are decidedly not human-like.” Perhaps they mean that while it’s capable of being our generation’s Clippy, it’s still far from being a companion like in the movie Her.

What does this mean for the cup of coffee that we’re still waiting for? We shall wait and see.